Using AI to Create Marketing Assets? Don’t Let Aversion Kill Your Engagement (Research-Backed Analysis & Guide)

Is it OK to use AI to create marketing assets?

If quality is unaffected (or even better), does it matter? Why would consumers care if they get the same results—from a useful whitepaper, say? Or perhaps it’s only a matter of time before AI-generated ads are pervasive. In this case, familiarity doesn’t breed contempt.

The problem with these positions is they fail to account for a powerful, often overlooked engagement killer: algorithm aversion. It’s a proven, well-researched phenomenon that describes how consumers devalue AI-generated assets simply because they’re generated by AI.

I spent over a month looking at the latest research into AI aversion and spoke to some of the leading researchers in the field.

Two things became clear. First, there are huge opportunities to use AI in consumer-facing marketing without drawing negative reactions. Second, there is a sizable and surprising amount of nuance. And the risks of getting it wrong are very real.

How Pervasive Is AI Use in Consumer-Facing Marketing?

To what extent are marketers relying on AI to create assets?

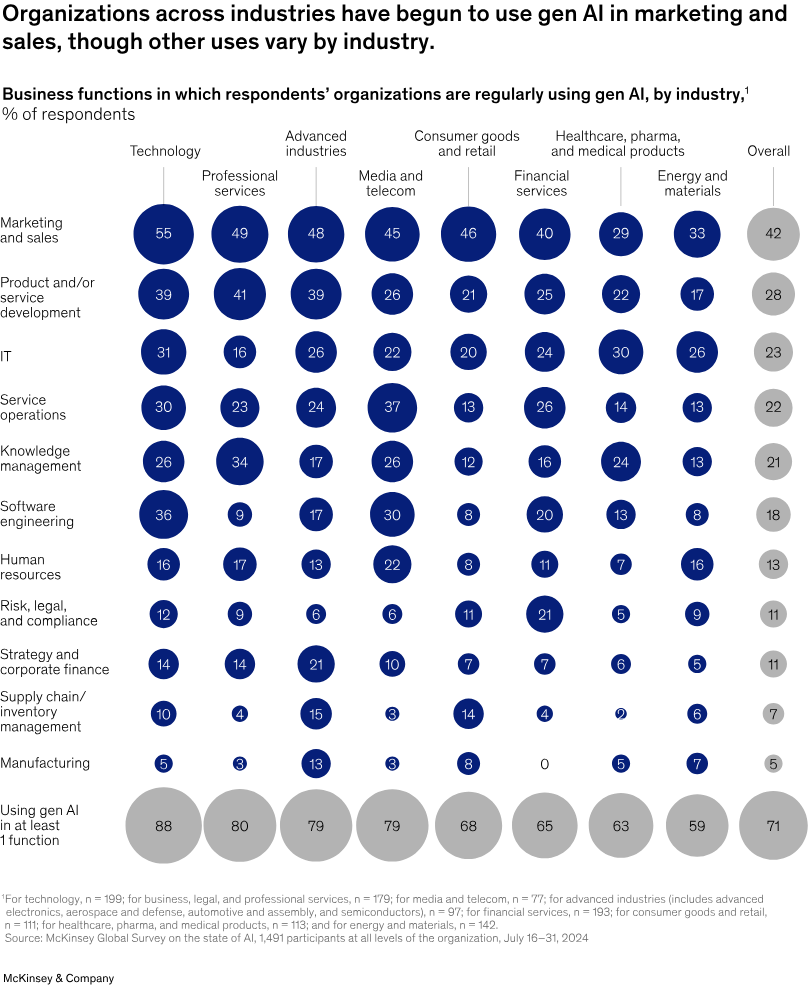

Using AI in marketing is one of its most common applications, according to Gartner’s most recent State of AI report, with 42% of marketers indicating adoption across their workflows. A 2025 Salesforce survey puts that figure higher at 51%, with content and ad copy composition as the primary uses. And HubSpot’s 2025 State of Marketing Report also found that image and text creation was the top use case, with one in five marketers planning to use AI for end-to-end campaign execution in the near future.

There are also plenty of anecdotal accounts that support this data. Dave Baker runs a leading B2B proofreading agency called Super Copy Editors. He handles a large volume of marketing materials, and his experience mirrors the preceding stats:

“AI-generated content really started ramping up around 2023, and now I routinely see the telltale signs of it. It’s not just social media content or blog posts, either. It’s clearly being used in reports, proposals, and other high-stakes content. It’s everywhere.”

There have also been several examples of fully AI-generated assets used in high-profile ad campaigns.

Coign, for example, claims to have produced the world’s first fully AI-generated TV ad.

Palo Alto released a ten-video campaign in September 2025 titled “Be A Genius, Deploy Bravely.” The videos were created with a mix of AI tools: Google Veo, Gemini, and Artlist.

CMO Kelly Waldher said, “We’re embracing AI to not only tell our story but to set a new industry benchmark for speed, engagement, and efficiency.”

You may also have seen Coca-Cola’s AI-generated holiday ads (denounced as slop by many critics), released in late 2024. I’ll leave you to judge the quality.

Marketers are using AI to create their assets. And it’s a trend that’s only going to gather momentum if the noise that leaders are making is anything to go by.

What’s more, it’s something that is being used at every stage of the asset creation process, from strategization and planning right through to the living incarnation of Isaac Newton you see in the preceding video.

Judging from the ads above, this approach seems to make sense. The quality is decent enough (and getting better), and AI opens up creative possibilities at a fraction of the normal price.

The problem is that AI aversion doesn’t care about quality. You can get everything right but still turn prospects off. And that’s why you need to understand what AI aversion is, including the most common myths about it. Armed with this knowledge, you can then put together a plan to address its causes.

The 3 AI Myths Putting Marketing Campaigns at Risk

AI aversion describes reluctance among consumers to engage with AI-generated outputs and AI interfaces over human counterparts. It’s a form of algorithm aversion, which is well-documented and has been seriously studied since the mid-2010s.

In a recent literature review, Jussupow and others describe algorithm aversion as a “biased assessment of an algorithm which manifests in negative behaviours and attitudes towards the algorithm compared to a human agent.”

Importantly, it’s widespread in marketing. A meta-analysis that looked at the use of AI specifically in consumer-facing business contexts found it to be pervasive and well-entrenched:

“While we observe a tremendous uptake in the use of AI-based products and services across virtually all markets, consumer segments, and businesses, a large body of research has demonstrated overarching aversion toward AI.”

What I found most striking as I dug deeper into the research—and spoke to people working at the vanguard of this topic—is how many of our intuitions about AI fall wide of the mark. In particular, there are three that stand out.

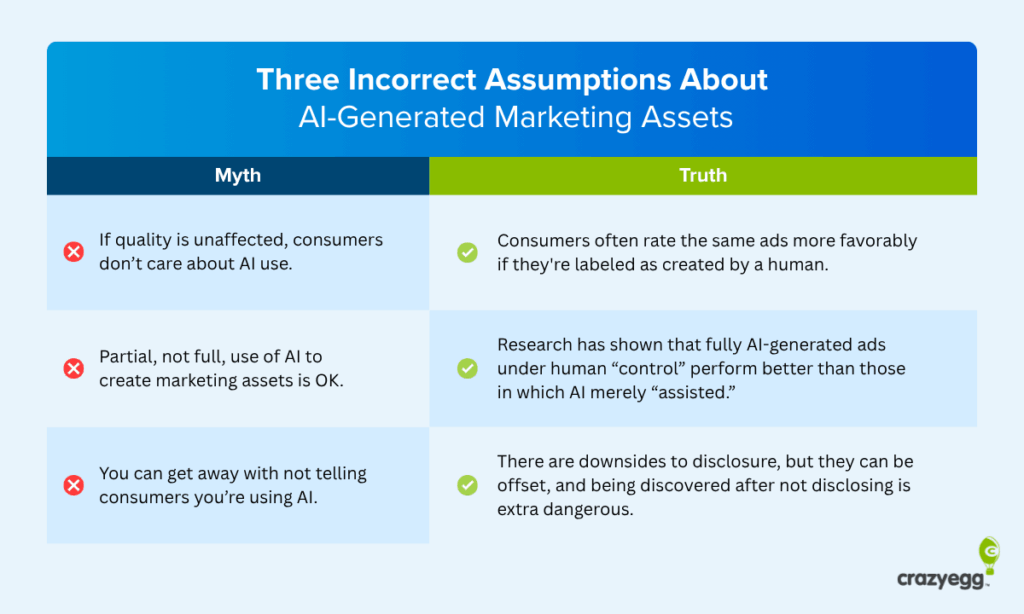

Myth #1: If quality is unaffected, consumers don’t care about AI use

This is one of the most widespread myths around when it comes to AI use in marketing. It’s also point-blank not true. Research has repeatedly shown that AI aversion is largely independent of quality.

A 2025 study that examined evaluations of content labelled as either “AI,” “human-AI collaboration,” or “human” found that “explicitly disclosing AI involvement in creative works consistently influences perceptions, even when the underlying content remains identical.”

Here’s a snippet from a 2024 study on the same topic: “While the raters could not differentiate the two types of texts in the blind test, they overwhelmingly favored content labeled as ‘Human Generated,’ over those labeled ‘AI Generated,’ by a preference score of over 30%.”

In addition, research conducted in 2023 uncovered a similar, but more nuanced, effect. It found that when the origin of a piece of marketing content was revealed, recipients increased their estimation of the human content. This effect was labelled “human favoritism.” The paper reads, “Knowing that the same content is created by a human expert increases its (reported) perceived quality, but knowing that AI is involved in the creation process does not affect its perceived quality.”

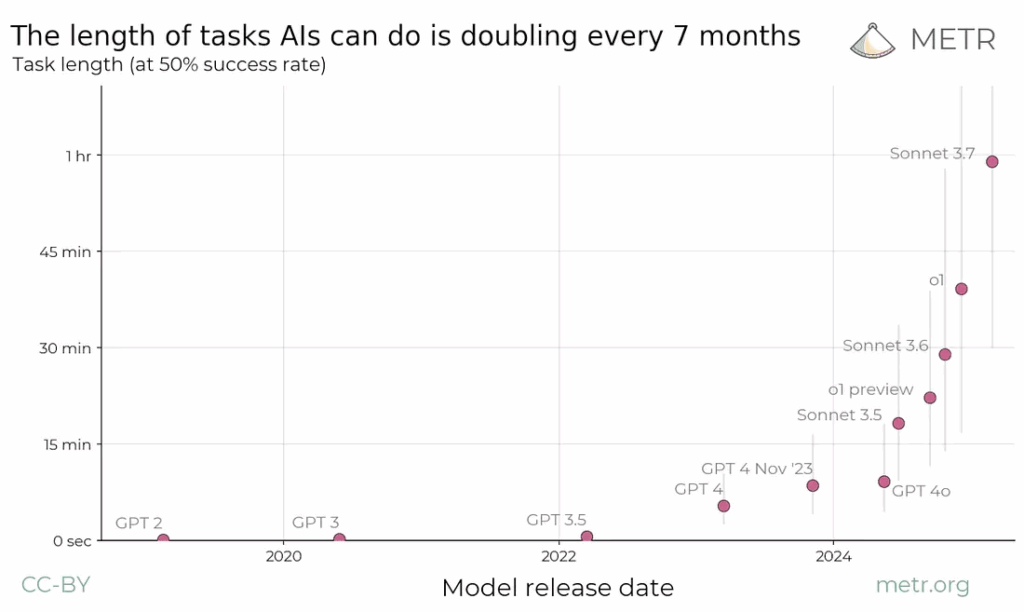

If the current trajectory continues, most if not all AI-generated assets will soon be indistinguishable from those created by humans. The speed and reliability with which AI can complete tasks is improving exponentially. That’s what analysis by METR found when it measured AI competency against a benchmark of complex real-world tasks.

As AI expert and futurist Benjamin Todd writes, “If this trend continues, AI models will be able to handle multi-week tasks by late 2028 with 50% reliability (and multi-day tasks with close to 100% reliability).”

There is a serious danger for marketers that work on the basis that consumers don’t care. Quality that equals, and even surpasses, human content is coming. Many would argue it’s already here. And marketers that assume that’s the end of the story may well find themselves making their campaigns worse by accident.

Myth #2: Partial—not full—AI involvement is OK

This is another widely touted misconception. It usually finds expression as “working with AI as an assistant” or as “part of the research process.”

Dr. Martin Haupt, Professor for Digital Marketing at Albstadt-Sigmaringen University, was kind enough to speak to me about his recent research in this area. He found that human-generated marketing materials labelled as made with “AI assistance” underperformed compared to the same materials labelled as generated by AI under “human control.”

“We found that people do not care about the level of AI involvement—a presumption that has been around for years now in which people say, ‘Do not let AI take over the full task, people have to detect the human work in it.’ Instead, our research showed that people just need the security or assurance from the company that there is a human on the lever taking responsibility.”

The 2024 study by Dr. Haupt and his team looked at algorithmic aversion to AI-human hybrid content in a marketing context, in this case an ecommerce description and a company vision statement:

“…not every form of human-AI collaboration was proven to be effective. In particular, using AI as author with a (final) human control led to comparable message credibility to sole human authorship. In contrast, a human author and AI support led to lower message credibility and reduced consumers’ attitudes towards the company…”

In the experiments, the (identical) AI-supported content was labelled “Written by Mary Smith supported by Artificial Intelligence,” and the human-controlled AI content was labelled “Generated by Artificial Intelligence controlled by Mary Smith.”

That’s a difference of only a few words. For marketers the conclusion is clear: don’t emphasize the limit of AI in your work. And certainly don’t describe it as an assistant. Instead, make sure you are highlighting how a human is in “control” of the process and responsible for all outputs. This is the assurance and oversight that customers are looking for.

Myth #3: It’s better not to tell consumers you’re using AI

It’s true that people can’t accurately distinguish between good AI and human content. A major study conducted by a team at Cornell University looked at human detection across a range of media channels and countries, concluding that “state-of-the-art forgeries are almost indistinguishable from ‘real’ media, with the majority of participants simply guessing when asked to rate them as human- or machine-generated.”

In addition, a review of existing research—aptly titled The transparency dilemma—found that disclosure actually increases mistrust in AI outputs and those using AI.

The logical conclusion? Don’t tell consumers that you’re using AI. They can’t tell the difference, and even if you do disclose, you’re shooting yourself in the foot.

Well…not quite.

The risk of discovery of AI use after not disclosing is particularly severe. Get into the small print and there’s an interesting insight. The study cited above (The transparency dilemma) found that actors who were discovered using AI without disclosure were trusted least of all. There have been some particularly notable cases of this happening with video content—from Will Smith and J. Crew—where disclosure of AI use after viewers noticed distortions intensified backlash.

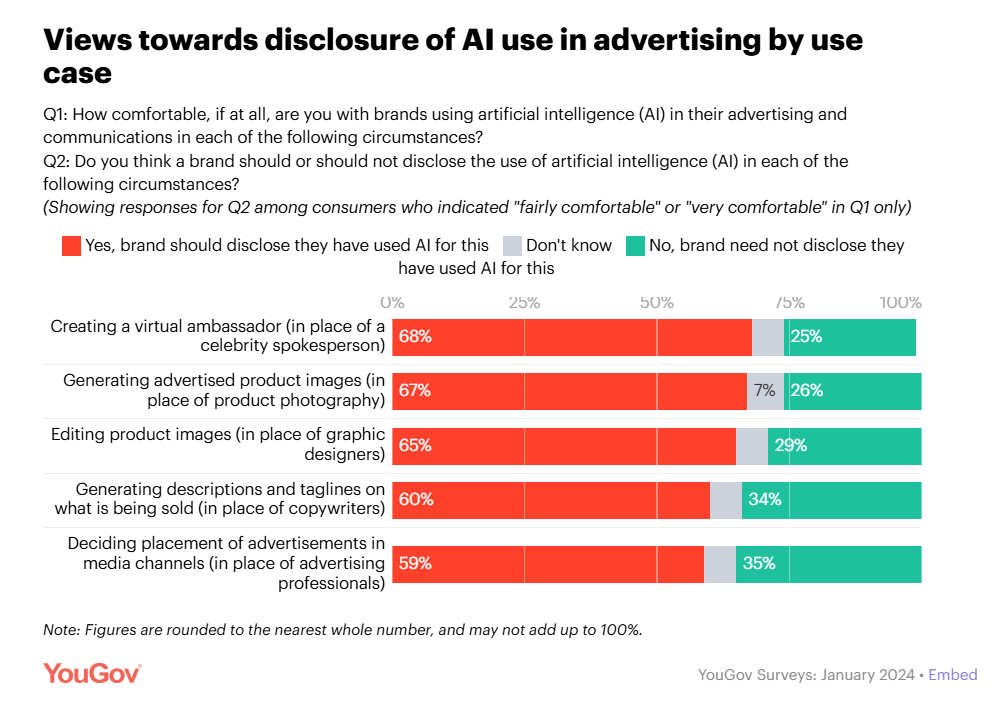

Even setting aside brewing legislation, the issue falls firmly on the demand side of the equation. More and more people are calling for disclosure. 76% of American consumers think that disclosure of AI use is important, according to the 2024 Ethical Marketing Survey conducted by Carson Business School. A global YouGov survey also found that the majority of consumers want AI disclosure across a whole range of domains.

This is a conundrum. Consumers are asking for disclosure, but disclosure increases mistrust and aversion. When I spoke to Dr. Haupt, he argued in favor of “transparent human-AI collaboration to not alienate customers.”

The key is the manner in which AI is disclosed. If marketers can account for other factors effectively—emphasizing human control, humanizing content, and accounting for nuances between formats—the benefits of transparency will likely outweigh the risks in many cases.

All of which leads nicely onto the next question—what does effective use of AI look like?

AI Can Provide Incredible Results but Delivery and Context Are Everything

How can companies, especially smaller and mid-sized ones, begin to make sense of this?

Despite the risks of AI aversion, the opportunities of careful adoption are immense. They point to a world in which the creation of high-quality, targeted marketing assets benefits consumers and is feasible for all sizes of businesses.

The key point to keep in mind, however, is that context, medium, and labeling are everything.

I spoke at length with Professor Andrew Smith, Chair of Consumer Behaviour & Analytics at N/LAB, Nottingham University Business School. He doesn’t believe that algorithm aversion will excessively prohibit the use of AI in consumer-facing marketing over the long term.

For him, AI in marketing is part of the “evolution of the consumer as a decision-maker and actor in a symbiotic system of exchange.” He predicts that the most “potent” consumer-facing AI marketing applications will meet three criteria:

- AI marketing will mirror human interactions (humanistic).

- Content will be individualized where possible and appropriate (personalized).

- Both transparent (overt) and hidden (covert) AI use will be effective, depending on context.

If companies account for these factors, he argues, they’ll generally sidestep AI aversion as usage becomes more normal and widespread. In his book Consumer Decision-Making, Analytics and AI, he writes:

“HPO [humanistic, personalized, overt] and HPC [humanistic, personalized, covert] will become more common and more potent; more persuasive and more interactive like human actors or agents… This would represent an augmentation and refinement of existing technology, not an entirely new manifestation…”

Context matters. Reactions to AI vary substantially across industries, domains, and individual use cases. Recommending a new series on a streaming platform, for example, is very different from diagnosing an illness. Marketers need to account for this, recognizing that while AI use may be acceptable in one area, it can trigger aversion in another.

A major meta-analysis published this year highlighted this “context dependency” in multiple consumer spheres, showing greater aversion in high-stakes applications versus mundane ones:

“…our findings demonstrate that consumer responses are highly context-dependent and vary systematically by AI label, with the most negative responses toward embodied forms of AI compared to other AIs such as AI assistants or mere algorithms, and substantial domain differences with areas such as transportation and public safety compared to areas where AI improves productivity and performance.”

Research of this type points to a clear conclusion: AI aversion exists, but it’s highly nuanced. Marketers that want to figure out how to use AI to make their marketing workflows more efficient need to account for this “context dependency” alongside how they label and humanize their assets.

How to Use AI to Create Marketing Assets Without Losing Customers

There is a series of relatively straightforward steps that companies can take to ensure “safe” use of AI in consumer-facing marketing, an approach that reaps the benefits of AI while mitigating the dangers of alienating customers.

1. A/B test and offer human options where possible

Understanding consumer preferences across domains, let alone individual segments, is easier said than done. Most companies don’t have the traffic or resources to conduct extensive A/B and user tests or gather extensive in-house data from surveys and forms.

Dr. Mats Georgson, CEO of Georgson and Co., says, “There are ways to get a fairly rapid response on whether people like AI-generated stuff or not and whether it moves the needle.” He is, however, keen to point out it will be harder for those who aren’t committed to quality: “Bad ads are just bad ads, whether AI did them or not.”

Assuming that AI slop isn’t an issue, there are two immediate ways to gauge customer sentiment without sample sizes of tens of thousands of customers: test against human creatives on third-party platforms and offer human options.

2. A/B test on PPC platforms

PPC advertising platforms like Google Ads and Meta Ads make running experiments relatively simple without the quantities of traffic required for in-house A/B testing.

Rather than going all in on AI advertising from day one, you can use these platforms to rigorously test AI ads and copy against the assets of your human team.

They offer a valuable opportunity to try different labeling strategies and degrees of AI humanization, like using AI avatars or AI-generated voiceovers. You can also test more outlandish content, which has been shown to drive higher click-through rates in certain circumstances.

This will have the effect of showing you clearly where AI offers enhancement and where human input remains most effective.

Given that marketing teams are rapidly adopting AI (often without thinking of aversion), this testing will provide important nuance and understanding of context dependency, especially as marketing creatives transition, at least partly, into an engineering role.
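If you export impressions and clicks from a PPC experiment, a quick significance check helps you avoid reading too much into small differences. The sketch below is one common way to do this (a two-proportion z-test on click-through rate), not a method prescribed by any of the researchers quoted here. The function name and the campaign numbers are hypothetical placeholders, and it assumes each variant received independent traffic.

```python
import math


def compare_click_through(clicks_a, views_a, clicks_b, views_b):
    """Two-sided two-proportion z-test on click-through rate.

    Returns (ctr_a, ctr_b, z, p_value). Illustrative helper, not a
    platform API: feed it counts exported from your ad reports.
    """
    if views_a <= 0 or views_b <= 0:
        raise ValueError("Each variant needs at least one impression")

    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b

    # Pooled rate under the null hypothesis that both variants perform equally
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        raise ValueError("No variation in the data; cannot run the test")

    z = (ctr_a - ctr_b) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value


# Hypothetical numbers: human-made creative vs. AI-labelled creative
ctr_human, ctr_ai, z, p = compare_click_through(120, 4000, 90, 4000)
print(f"Human CTR {ctr_human:.2%}, AI CTR {ctr_ai:.2%}, z={z:.2f}, p={p:.3f}")
```

A small p-value suggests the gap between variants is unlikely to be noise, but it says nothing about why the gap exists. Change one thing at a time (the label, the avatar, the voiceover) so you can attribute the result.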

3. Allow for human options where possible

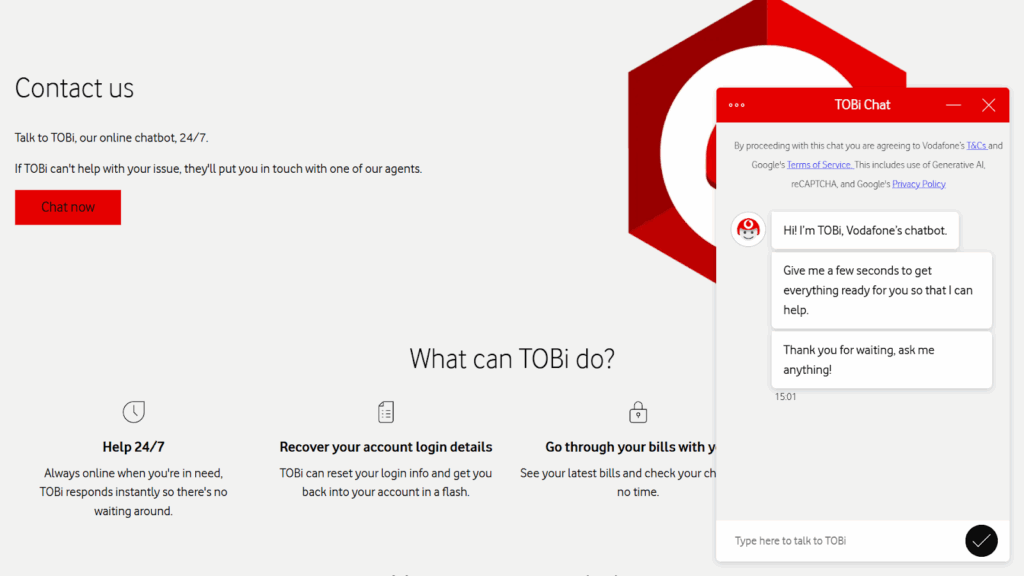

Using a chatbot on your landing pages?

Display an option to speak to a human representative.

Using AI-generated content in your newsletters?

Show a toggle to “opt out of AI content” on your subscriber preferences page.

Including product recommendations on your ecommerce site?

Allow users not to see AI-tailored content.

Letting your customers opt for human alternatives gives you immediate feedback about AI acceptance and preference. It also highlights potential trust and transparency gaps in your current AI deployment. High uptake of alternate human options likely indicates an issue. It acts as an opportunity to test different labels, levels of humanization, and, where possible, personalization.
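To judge whether uptake of a human option is "high," it helps to put an uncertainty range around the observed rate, particularly on low-traffic surfaces. The sketch below uses a Wilson score interval and flags any surface whose lower bound exceeds a threshold. The 20% threshold, the surface names, and the counts are all hypothetical assumptions for illustration; choose a threshold that reflects your own baseline.

```python
import math


def wilson_interval(opt_outs, total, z=1.96):
    """Approximate 95% Wilson score interval for an opt-out rate."""
    if total <= 0:
        raise ValueError("total must be positive")
    rate = opt_outs / total
    denom = 1 + z**2 / total
    centre = (rate + z**2 / (2 * total)) / denom
    half_width = (
        z * math.sqrt(rate * (1 - rate) / total + z**2 / (4 * total**2)) / denom
    )
    return centre - half_width, centre + half_width


def flag_high_opt_out(surfaces, threshold=0.20):
    """Return surfaces whose opt-out rate is credibly above the threshold.

    `surfaces` maps a name to (users choosing the human option, users shown AI).
    The threshold is an assumption, not a research-backed cutoff.
    """
    flagged = []
    for name, (opt_outs, total) in surfaces.items():
        lower, _upper = wilson_interval(opt_outs, total)
        if lower > threshold:
            flagged.append(name)
    return flagged


# Hypothetical counts for two AI touchpoints
observed = {
    "landing_page_chatbot": (180, 600),
    "newsletter_ai_content": (25, 1000),
}
print(flag_high_opt_out(observed))
```

Using the lower bound rather than the raw rate keeps a handful of opt-outs on a quiet page from triggering a false alarm.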

4. Emphasize human control over AI usage

Lack of perceived control over AI outputs is a key driver of algorithm aversion. What’s surprising is that consumers are willing to “transfer” this need to the creators of content. If a human is seen as being “on the lever,” aversion is reduced.

The key here is to emphasize control, not assistance. Dr. Martin Haupt’s study (cited earlier) showed that people were receptive to full AI use as long as it was under the oversight of a human actor.

You can demonstrate human control by including a photograph of the human in control, drawing attention to comprehensive fact checks (this also reduces the problem of lingering fear around AI hallucinations), and explaining, where possible, why you are using AI and the benefits to consumers.

In addition, research has shown that human involvement increases the “perceived capabilities of AI,” thereby reducing aversion:

“…human involvement seems to reduce aversion through increasing the perceived capabilities of the algorithm…as users prefer algorithms which were explicitly framed as trained by humans.”

Use the phrase “created under human control” or a similar variant. And avoid “assistance,” as this has been shown to increase aversion across various contexts.

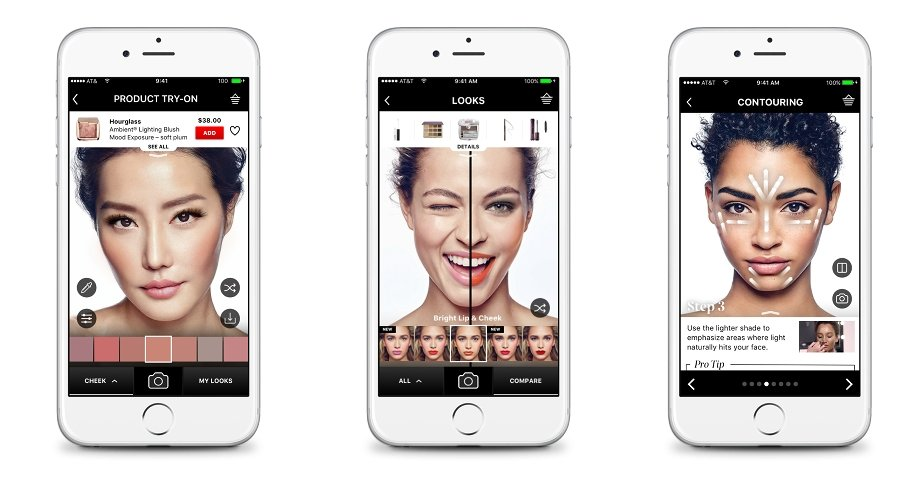

5. Humanize AI-generated content

Humanization is the process of making AI outputs authentic, relatable, and empathetic.

Consumers generally show more satisfaction when interacting with anthropomorphized, or human-like, algorithms.

One interesting study looked at this specifically in the finance industry and found that use of AI avatars successfully reduced AI aversion:

“Humanizing the financial advice from an algorithm with an avatar that promotes the perception of competence effectively reduces algorithm aversion and can enhance reliance on the financial advice of robo-advisors.”

Here are some examples of how to humanize AI materials:

- Natural language flow: When asked why Spotify used a human-like voice for its AI DJ, Product Director Zeena Qureshi said, “We know that human voice helps people form connections, and the same is true when it comes to DJ. We found that having the voice sound human is key for users to foster a deeper connection with DJ, as a human voice provides familiarity and instant context.”

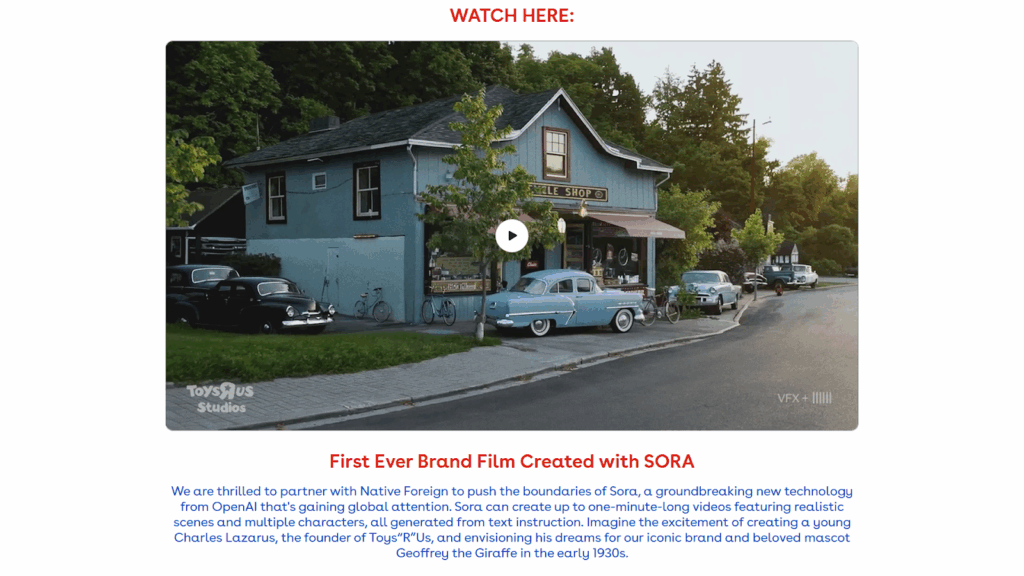

- Storytelling coherence (logical, plausible flow): A Toys “R” Us ad—entirely AI-generated—used a human-like voiceover to narrate the story of its founder and how mascot Geoffrey the Giraffe came into existence.

6. Lean towards transparency initially (overt vs. covert)

Should you disclose your use of AI or keep it quiet?

The research into disclosure is mixed. It’s not always necessary to label assets that have been created with AI—Dr. Smith’s prediction is that both overt and covert use of AI will become prevalent and powerful. However, it is better to err on the side of transparency, especially in the absence of domain-specific data.

While disclosure of AI use has been shown to diminish credibility, research in some areas has also demonstrated that covert use—where AI is hidden—has negative consequences if discovered.

Similarly, disclosure isn’t all bad. A study that looked at Instagram ads and disclosures found that “consumers felt less manipulated when viewing an AI disclosure, which led to an increase in source credibility and brand attitude but to a decrease in advertising attitude.”

It’s important to remember that mitigating AI aversion is about taking a multi-faceted approach. While disclosure may reduce advertising attitude (overall sentiment towards an ad), the risk of discovery of covert practices outweighs the short-term negatives. What’s more, humanization, emphasis of control, and personalization will help mitigate this additional aversion.

Over time, you can experiment with removing disclosures—especially on low-stakes assets like FAQs. But don’t run the serious risk of alienating customers when you’re not certain of their preferences.

7. Take a domain-specific approach

Something that the researchers I spoke to emphasized time and again was that AI aversion is highly domain specific. It can manifest in different ways depending on context, especially in morally critical areas like messages related to politics and weapons.

Don’t take a spray-and-pray approach. Trial marketing assets with specific channels and audience segments. It’s entirely plausible, for example, that a certain audience group with deep topical knowledge might be more averse than one with only entry-level understanding.

Dr. Haupt recommends starting with “non-sensitive” fields such as blogs, news sections, messages about innovations and products, and social media content without direct implications for consumers (no changes of their conditions, prices, etc.).


Pulling All These Insights Together

I became fascinated by the topic of algorithm aversion because so few marketers are talking about it. Yet it seems to me to be of crucial importance.

Generative AI offers incredible opportunities for efficiency and personalization in marketing.

However, failure to account for the nuance of how AI aversion manifests can quickly put consumers off. What’s more, immediate engagement isn’t the only issue. The risk of long-term brand damage down the road is also serious.

There are dangers to being an AI pioneer. There are also equal, perhaps greater, dangers to adopting an “all human” approach, given the fact that your competitors will almost certainly be benefiting from the efficiency gains that AI can provide.

The answer is to listen to consumers as closely as possible with the resources you have. At the same time you should focus on humanization, control, and smart transparency.

Combine these elements and you have a safe route forward, one that protects you both now and in the far future.

References

Frank, J., Herbert, F., Ricker, J., Schönherr, L., Eisenhofer, T., Fischer, A., Dürmuth, M., & Holz, T. (2024). A representative study on human detection of artificially generated media across countries (pp. 55–73). https://doi.org/10.1109/SP54263.2024.00159

Ganbold, O., Rose, A. M., Rose, J. M., & Rotaru, K. (2022). Increasing reliance on financial advice with avatars: The effects of competence and complexity on algorithm aversion. Journal of Information Systems, 36(1), 7–17. https://doi.org/10.2308/ISYS-2021-002

Garvey, A. M., Kim, T., & Duhachek, A. (2023). Bad news? Send an AI. Good news? Send a human. Journal of Marketing, 87(1), 10–25. https://doi.org/10.1177/00222429211066972

Gomes, S., Lopes, J. M., & Nogueira, E. (2025). Anthropomorphism in artificial intelligence: A game-changer for brand marketing. Future Business Journal, 11, 2. https://doi.org/10.1186/s43093-025-00423-y

Haupt, M., Freidank, J., & Haas, A. (2025). Consumer responses to human–AI collaboration at organizational frontlines: Strategies to escape algorithm aversion in content creation. Review of Managerial Science, 19, 377–413. https://doi.org/10.1007/s11846-024-00748-y

Heimstad, S. B., Wien, A. H., & Gaustad, T. (2025). Machine heuristic in algorithm aversion: Perceived creativity and effort of output created by or with artificial intelligence. Computers in Human Behavior: Artificial Humans, 5, 100190. https://doi.org/10.1016/j.chbah.2025.100190

Jussupow, E., Benbasat, I., & Heinzl, A. (2020). Why are we averse towards algorithms? A comprehensive literature review on algorithm aversion. In ECIS 2020 Research Papers (Paper 168). https://aisel.aisnet.org/ecis2020_rp/168

Lermann Henestrosa, A., & Kimmerle, J. (2024). The effects of assumed AI vs. human authorship on the perception of a GPT-generated text. Journalism and Media, 5(3), 1085–1097. https://doi.org/10.3390/journalmedia5030069

Schilke, O., & Reimann, M. (2025). The transparency dilemma: How AI disclosure erodes trust. Organizational Behavior and Human Decision Processes, 188, 104405. https://doi.org/10.1016/j.obhdp.2025.104405

Smith, A., Lukinova, E., Harvey, J., Smith, G., Mansilla, R., Goulding, J., & Avram, G. (2025). Consumer decision-making, analytics and AI. https://doi.org/10.4324/9781003507482

Song, M., Jiang, W., Xing, X., Mou, J., & Duan, Y. (2026). The more human-like the better? Effect of anthropomorphic level on customer intention to participate in AI service recovery. Technology in Society, 84, 103065. https://doi.org/10.1016/j.techsoc.2025.103065

To, R., Wu, Y.-C., Kianian, P., & Zhang, Z. (2025). When AI doesn’t sell Prada: Why using AI-generated advertisements backfires for luxury brands. Journal of Advertising Research. https://doi.org/10.1080/00218499.2025.2454120

Wang, Y., Chen, H., Wei, X., Chang, C., & Zuo, X. (2025). Trusting the machine: Exploring participant perceptions of AI-driven summaries in virtual focus groups with and without human oversight. Computers in Human Behavior: Artificial Humans, 6, 100198. https://doi.org/10.1016/j.chbah.2025.100198

Wortel, C., Vanwesenbeeck, I., & Tomas, F. (2024). Made with artificial intelligence: The effect of artificial intelligence disclosures in Instagram advertisements on consumer attitudes. Emerging Media, 2(3), 547–570. https://doi.org/10.1177/27523543241292096

Zehnle, M., Hildebrand, C., & Valenzuela, A. (2025). Not all AI is created equal: A meta-analysis revealing drivers of AI resistance across markets, methods, and time. International Journal of Research in Marketing, 42(3, Part B), 729–751. https://doi.org/10.1016/j.ijresmar.2025.02.005

Zhang, Y., & Gosline, R. (2023). Human favoritism, not AI aversion: People’s perceptions (and bias) toward generative AI, human experts, and human–GAI collaboration in persuasive content generation. SSRN. https://doi.org/10.2139/ssrn.4453958

Zhu, T., Weissburg, I., Zhang, K., & Wang, W. (2025). Human bias in the face of AI: Examining human judgment against text labeled as AI generated. Findings of the Association for Computational Linguistics, 25907–25914. https://doi.org/10.18653/v1/2025.findings-acl.1329