Governance-first and LLM-agnostic Intake AI agent

Editor’s note: Hadeel discusses an intake and triage AI agent blueprint by ScienceSoft, first presented at WHX Tech 2025 in Dubai. She shows how orchestration with explicit guardrails removes the need for costly LLM fine-tuning while keeping the agent safe, compliant, and Arabic-capable. She also addresses practical questions raised by GCC healthcare leaders at the event. If you want to discuss feasible AI launch paths for your organization, Hadeel and other AI development consultants at ScienceSoft are ready to assist.

Many remain unaware of how medically capable frontier general LLMs have become. Common sense suggests that triage and diagnostics should be reserved for models trained specifically on medical data. Yet studies consistently show that in terms of clinical reasoning, general LLMs match specialized models. Some evaluations even show parity with experienced doctors and doctors assisted by AI.

- A 2025 Nature Medicine study found no clear diagnostic-accuracy advantage for a custom medical model (Med-PaLM 2) over GPT-4 (e.g., 72.3% vs. 73.66% on a large multiple-choice test set).

- HealthBench, with 5,000 patient-AI dialogues graded by 262 physicians, reported OpenAI's o3 outperforming unaided physicians (~60% vs. ~30%) and matching physicians assisted by o3 (~60% vs. ~60%).

That is why healthcare AI engineers now focus on orchestration and guardrails for general LLMs rather than on training specialist models. Costly LLM fine-tuning is reserved for narrow, high-risk niches (e.g., rare disease diagnostics or pediatric triage). In lower-risk fields, orchestration does most of the work, making AI agents clinically safe, compliant, and capable of providing a smooth experience for both patients and doctors.

The solution described in this article, an AI agent for patient intake and triage designed for the Gulf region, shows how this approach works in practice.

AI Agent Architecture That Makes Orchestration Replace Fine-Tuning

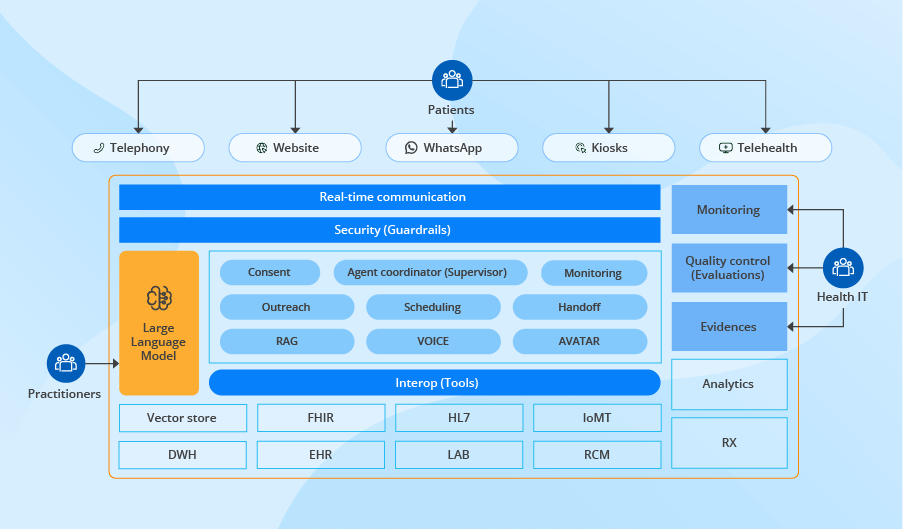

Below is a blueprint of a GCC-oriented intake and triage AI agent designed for the region’s language mix and data protection rules. The blueprint targets these core capabilities:

- Omnichannel text and voice communication (via telephony, website chats, mobile apps, kiosks, or telehealth platforms) in Arabic and English, including major Arabic dialects, Arabizi, and code-switching.

- Single-session consent, symptom, and history capture, and real-time eligibility checks.

- Red-flag symptom detection with immediate clinician handoff.

- Venue recommendation and booking, with a structured ISBAR note posted to EHR.

The architecture blueprint below shows how a policy-first orchestration layer drives the flow while keeping safety and compliance outside the LLM for efficiency, transparency, and fast change control.

Unified patient channels

All patient entry points (such as telephony, website chats, mobile apps, self-service kiosks, and telehealth platforms) are orchestrated through a single real-time communication layer. It maintains a per-intake state in a channel-agnostic context store after identity and consent are verified. Channel adapters bind to this state, so a patient can start on the phone and finish on screen without repeating a word. The layer routes structured requests to consent capture, eligibility verification, scheduling, and EHR update in the same flow, which increases intake completion and reduces patient drop-offs.
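As a rough illustration of the channel handover described above, here is a minimal Python sketch of a channel-agnostic context store. The names `IntakeState`, `ContextStore`, and `ChannelAdapter` are hypothetical, the store is an in-memory dictionary, and the identity and consent verification step is left out for brevity. It is not ScienceSoft's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class IntakeState:
    """Per-intake state shared by every channel (hypothetical schema)."""
    intake_id: str
    answers: Dict[str, str] = field(default_factory=dict)
    channels_seen: List[str] = field(default_factory=list)


class ContextStore:
    """In-memory stand-in for a channel-agnostic context store."""

    def __init__(self) -> None:
        self._states: Dict[str, IntakeState] = {}

    def open(self, intake_id: str) -> IntakeState:
        # Create the state once, then hand the same object to any channel.
        return self._states.setdefault(intake_id, IntakeState(intake_id))


class ChannelAdapter:
    """Binds one channel (telephony, web chat, kiosk) to the shared state."""

    def __init__(self, name: str, store: ContextStore) -> None:
        self.name = name
        self.store = store

    def record(self, intake_id: str, question: str, answer: str) -> IntakeState:
        state = self.store.open(intake_id)
        if self.name not in state.channels_seen:
            state.channels_seen.append(self.name)
        state.answers[question] = answer
        return state

    def next_unanswered(self, intake_id: str, questions: List[str]) -> Optional[str]:
        # Skip anything already captured on another channel.
        state = self.store.open(intake_id)
        for question in questions:
            if question not in state.answers:
                return question
        return None
```

Because both adapters read the same state, a web chat adapter asked for the next question skips whatever the telephony adapter has already captured.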

Policy-driven agent workbench

At the center sits a supervisor service that applies one policy at every step of the flow and decides which service acts next. For example, it triggers the outreach service when patient information is insufficient, or the scheduling service to set the visit time.
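The supervisor's routing decision can be pictured as a single ordered rule set. The sketch below is one possible shape for it, assuming a hypothetical `Session` record, hypothetical service names, and a rule order chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical list of fields an intake needs before it can proceed.
REQUIRED_FIELDS = ("chief_complaint", "duration", "insurance_id")


@dataclass
class Session:
    """Hypothetical snapshot of what is known about one intake."""
    consent_given: bool = False
    red_flag: bool = False
    eligibility_checked: bool = False
    visit_booked: bool = False
    fields: Dict[str, str] = field(default_factory=dict)


def next_service(session: Session) -> str:
    """Return the name of the service that should act next.

    The rule order is an assumption: safety first, then consent,
    then data completeness, eligibility, and scheduling.
    """
    if session.red_flag:
        return "handoff"
    if not session.consent_given:
        return "consent"
    if any(name not in session.fields for name in REQUIRED_FIELDS):
        return "outreach"  # patient information is insufficient
    if not session.eligibility_checked:
        return "eligibility"
    if not session.visit_booked:
        return "scheduling"
    return "ehr_update"
```

Keeping the rules in one function means every step of the flow is evaluated against the same policy, and a reviewer can read the routing order top to bottom.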

The same control path governs the language services that secure Arabic precision, the safety layer that guards clinical risk, and the compliance controls that keep every data move within Gulf rules:

- Arabic specifics support: The retrieval (RAG) service pulls local clinic rules, referral paths, and payer instructions in Arabic and English from a vector store, so the AI agent follows live policy without LLM weight edits. Voice and video avatar services support English, Arabic, major dialects, Arabizi, and mixed speech. They rely on a frontier generalist LLM pretrained on massive multilingual corpora. This approach is reasonable as high-quality Arabic medical data are too scarce for safe fine-tuning, while general models already handle Arabic with reliable accuracy. The agent’s safety guardrails log dialect recognition confidence scores, and when they dip, the supervisor asks a clarifier question or moves the session to staff.

- Clinical safety services: The monitoring service reviews each patient's input for red-flag indicators such as bleeding in pregnancy or stroke warning signs and passes a stop signal to the handoff service, which routes the session to nursing staff or the emergency line with a time-stamped context bundle. This approach was validated in clinical-grade LLM triage testing, which showed that general models can reliably recognize urgent cases and trigger timely escalation.

Confidence checkpoints apply the same guard, asking a clarifier question or yielding to a human when certainty drops, and scope walls keep the agent out of diagnosing or prescribing. A quality‑control queue draws every transcript from the evidence log for spot clinician reviews, scoring policy adherence, and feeding improvements back into guardrails.

- Compliance controls: The consent service records explicit patient authorization in line with GCC data‑protection codes (e.g., Saudi Arabia’s PDPL or UAE’s PDPL) and then stamps a signed token into the evidence log. That tamper-evident log captures every prompt, policy decision, and escalation and stays in the region for mandated retention.
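The red-flag stop signal, confidence checkpoints, and scope walls described in the list above can be sketched as one per-turn check that lives outside the LLM. Everything specific here is an assumption for illustration: the phrase list is not a clinical rule set, the keyword match stands in for a real detection service, and the two thresholds are made-up values.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    CLARIFY = "clarify"   # ask a clarifier question
    HANDOFF = "handoff"   # route the session to staff
    REFUSE = "refuse"     # scope wall: decline and redirect


# Illustrative phrases only, not a validated clinical list.
RED_FLAG_PHRASES = ("bleeding in pregnancy", "face drooping", "slurred speech")
# Intents the agent must never act on (hypothetical labels).
OUT_OF_SCOPE_INTENTS = {"diagnose", "prescribe"}
# Hypothetical thresholds; a provider would set these in policy.
CLARIFY_BELOW = 0.75
HANDOFF_BELOW = 0.50


def check_turn(text: str, intent: str, confidence: float) -> Action:
    """Decide what the guardrail layer does with one patient turn."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in RED_FLAG_PHRASES):
        return Action.HANDOFF
    if intent in OUT_OF_SCOPE_INTENTS:
        return Action.REFUSE
    if confidence < HANDOFF_BELOW:
        return Action.HANDOFF
    if confidence < CLARIFY_BELOW:
        return Action.CLARIFY
    return Action.CONTINUE
```

Since the thresholds and phrase lists are plain data, changing them does not touch the model, which is the point of keeping the guard outside the LLM.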

Large Language Model (LLM)

The LLM sits in a swappable slot inside the stack, so the orchestration can move from a GPT-class engine to Claude with the same tool instructions. Safety rules, confidence checks, and audit logs live in the guardrail layer, which means the healthcare provider can add a new policy or adjust a threshold without LLM retraining, and every trigger or handoff remains clear to reviewers.
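One common way to get a swappable slot is to code the orchestration against a small interface rather than a vendor SDK. The sketch below assumes a hypothetical `ChatModel` protocol and uses two stub models in place of real provider clients, so no actual vendor API is shown.

```python
from typing import Optional, Protocol

# Hypothetical shared instructions passed unchanged to any model.
TOOL_INSTRUCTIONS = "Collect intake details. Never diagnose or prescribe."


class ChatModel(Protocol):
    """Minimal interface the orchestration depends on (an assumption)."""

    def complete(self, system: str, user: str) -> str: ...


class StubModelA:
    """Stand-in for one vendor's client; a real adapter would call its SDK."""

    def __init__(self) -> None:
        self.last_system: Optional[str] = None

    def complete(self, system: str, user: str) -> str:
        self.last_system = system
        return f"[model-a] ack: {user}"


class StubModelB:
    """Stand-in for a second vendor's client."""

    def __init__(self) -> None:
        self.last_system: Optional[str] = None

    def complete(self, system: str, user: str) -> str:
        self.last_system = system
        return f"[model-b] ack: {user}"


class IntakeAgent:
    """Orchestration code that only knows the ChatModel interface."""

    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def ask(self, patient_text: str) -> str:
        return self.model.complete(TOOL_INSTRUCTIONS, patient_text)
```

Swapping engines then means constructing `IntakeAgent` with a different adapter, while the instructions and the guardrail layer stay as they are.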

Security guardrails

Each request passes a security engine that checks scope and risk, attaches a plain language rationale, and writes the record to an immutable ledger that remains in GCC data centers. The same layer enforces least-privilege access and blocks any call that tries to cross a regional boundary. It also hides all data from the LLM except the details that are strictly needed for a given step, to prevent unnecessary exposure, and follows short retention rules. Thus, auditors see a complete trail, and the provider meets GCC data codes, while clinicians still see a clear context.
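Two of these ideas, showing the LLM only the fields a step needs and keeping a record that exposes later edits, can be sketched briefly. The per-step allow-list and the `Ledger` class are hypothetical, and a SHA-256 hash chain is used here as one simple way to make a log tamper-evident; it does not by itself make storage immutable or region-bound.

```python
import hashlib
import json
from typing import Dict, List

# Hypothetical allow-list: which fields each step may pass to the LLM.
STEP_ALLOWED_FIELDS = {
    "eligibility": {"insurance_id", "date_of_birth"},
    "symptom_capture": {"chief_complaint", "duration"},
}


def minimize(step: str, record: Dict[str, str]) -> Dict[str, str]:
    """Keep only the fields the given step is allowed to see."""
    allowed = STEP_ALLOWED_FIELDS.get(step, set())
    return {k: v for k, v in record.items() if k in allowed}


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class Ledger:
    """Append-only log where each entry hashes the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, decision: str, rationale: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"decision": decision, "rationale": rationale, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev = self.GENESIS
        for e in self.entries:
            body = {"decision": e["decision"], "rationale": e["rationale"], "prev": e["prev"]}
            if e["prev"] != prev or e["hash"] != _digest(body):
                return False
            prev = e["hash"]
        return True
```

An auditor who reruns `verify()` can detect a changed rationale or a removed entry without trusting the application that wrote the log.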

Interoperability

A dedicated interoperability tier turns each intake event into a single standard FHIR or HL7 call for downstream systems. Within the same session, it checks insurance in the revenue cycle management (RCM) system, reads allergies and recent labs from the record, and writes the consent token with an ISBAR note back to the EHR chart. In this way, all traffic flows through one audited channel, and adding a new lab, pharmacy, or claims feed means mapping a profile, not rebuilding the agent.
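To make the write-back step concrete, the sketch below wraps an ISBAR note in a FHIR R4 `DocumentReference` resource expressed as a plain dictionary. The `IsbarNote` class and the choice of `DocumentReference` are assumptions made for illustration; a real EHR integration would follow the target system's own profiles and likely require more fields, such as coded document types.

```python
import base64
from dataclasses import dataclass


@dataclass
class IsbarNote:
    """Hypothetical container for the five ISBAR sections."""
    identify: str
    situation: str
    background: str
    assessment: str
    recommendation: str

    def as_text(self) -> str:
        return "\n".join([
            f"Identify: {self.identify}",
            f"Situation: {self.situation}",
            f"Background: {self.background}",
            f"Assessment: {self.assessment}",
            f"Recommendation: {self.recommendation}",
        ])


def to_document_reference(note: IsbarNote, patient_id: str) -> dict:
    """Build a minimal DocumentReference payload (not profile-validated)."""
    encoded = base64.b64encode(note.as_text().encode("utf-8")).decode("ascii")
    return {
        "resourceType": "DocumentReference",
        "status": "current",
        "subject": {"reference": f"Patient/{patient_id}"},
        "description": "Intake ISBAR note",
        "content": [
            {"attachment": {"contentType": "text/plain; charset=utf-8", "data": encoded}}
        ],
    }
```

The note travels as a base64 attachment, so Arabic text survives the round trip as long as both sides treat it as UTF-8.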

Analytics

Events flow from the guardrails, the supervisor, and the interoperability layer into a common stream, then land in analytics dashboards that track intake length, eligibility clears, red-flag counts, and escalation latency. The same events are anchored to patient and claim records in the enterprise data warehouse, which lets quality reviewers compare last week's policy thresholds with this week's denials and wait times. Because orchestration standardizes every event schema, these views hold when the model updates or a new hospital feed appears.
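A shared event schema is what lets a metric such as escalation latency be computed the same way regardless of which component emitted the events. Below is a minimal sketch, assuming a hypothetical `Event` record and two hypothetical event kinds, `red_flag_detected` and `handoff_accepted`.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Event:
    """Hypothetical standardized event emitted by any layer."""
    intake_id: str
    kind: str    # e.g. "red_flag_detected", "handoff_accepted"
    ts: float    # seconds since epoch


def escalation_latencies(events: Iterable[Event]) -> Dict[str, float]:
    """Seconds from first red-flag detection to first accepted handoff, per intake."""
    detected: Dict[str, float] = {}
    latencies: Dict[str, float] = {}
    for event in sorted(events, key=lambda e: e.ts):
        if event.kind == "red_flag_detected":
            # Keep only the earliest detection for each intake.
            detected.setdefault(event.intake_id, event.ts)
        elif (
            event.kind == "handoff_accepted"
            and event.intake_id in detected
            and event.intake_id not in latencies
        ):
            latencies[event.intake_id] = event.ts - detected[event.intake_id]
    return latencies


def red_flag_count(events: Iterable[Event]) -> int:
    """Number of distinct intakes with at least one red flag."""
    return len({e.intake_id for e in events if e.kind == "red_flag_detected"})
```

Neither function refers to the model or to a specific hospital feed, so the same dashboard query keeps working when either changes.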

Implementation Realities: Questions GCC Healthcare Leaders Actually Ask

Executives want practical detail, not pure theory. During ScienceSoft's presentation of the intake and triage AI agent at WHX Tech 2025, I received many questions. Here, I address the most frequent ones, grouped into three themes.

Where does the intake and triage AI agent fit into current Gulf care?

Where to deploy the AI agent for the quickest payback

Based on my experience, three settings bring the quickest return: hospital front desks, polyclinic reception lines, and virtual lobbies that run on WhatsApp. They all struggle with two chronic bottlenecks: manual eligibility checks that stall queues and a patchy clinical context that forces nurses to re-interview every patient. In these entry points, an AI agent removes the wait by routing insurance queries and symptom capture through one real-time loop. The output reaches staff as a single, structured packet instead of fragmented calls and paper slips.

Specialty limitations

In day-to-day practice, such agents work well in primary care, general medicine, family practice, common dermatology, diabetes follow-up, and minor orthopedics. These cases dominate intake lines and share patterns that a generalist model plus local retrieval handles with stable precision.

Yet the effectiveness of AI agents drops in narrow specialties such as oncology, neonatology, transplant medicine, and rare disease clinics. Here, the data is sparse, local pathways vary, and risk tolerance is lower, so the agent may gather history yet must pass routing to licensed staff until larger specialty-specific datasets prove parity with clinician triage.

Use in emergency departments

The agent can add value to emergency departments, yet only as a data aide. It can capture consent, symptoms, and vitals while the patient travels or waits, then load a structured note into the chart. But it must not assign acuity, choose a bed, or override a nurse. GCC clinical policies require a licensed professional to set the Emergency Severity Index or any equivalent scale.

Is the agent feasible for small healthcare providers?

Yes. If you run the AI agent in the cloud and use patient phones as the only hardware, capital expense stays low. Small providers need just two integrations, an eligibility feed and an appointment calendar, and must assign a clinician or senior administrator to review red-flag sessions and monitor dialog quality. While big hospitals may gain faster ROI from real-time eligibility checks covering hundreds of daily encounters, small providers still benefit through shorter queues, fewer rejected claims, and a lighter front-desk workload.

What are the prerequisites for a safe pilot?

Before the agent meets real patients, a Gulf provider prepares the following governance bundle that both proves regulatory compliance and feeds the agent’s policy engine:

Closing Note: Governance-First AI for Provider Wins

This intake and triage AI agent blueprint shows that a general-purpose LLM under clear guardrails can do more than match a specialist medical LLM:

- A hospital or clinic can deploy it in a few months and spend a small share of the budget usually spent on model weight adjustment.

- The stack supports Arabic and its dialects out of the box.

- Consent texts, insurance checks, schedule logic, and red flag thresholds can be revised through one policy file instead of a new model training cycle.

- External guardrails record every action and enforce GCC data rules, so the agent stays compliant and auditable. Any new regulation triggers a quick policy edit, not a software rebuild.

Book a practical expert session

A dedicated session with ScienceSoft’s healthcare AI experts yields a practical roadmap covering priority use cases, GCC compliance checkpoints, integration steps, and budget-time estimates for Arabic-capable AI for patient engagement, clinical decision support, or clinical operations.
